Proving Video Authenticity in the Age of AI Using Open Timestamp Records

Background

Advances in video generation models such as Google’s Veo and OpenAI’s Sora are making it increasingly difficult to prove the authenticity of video evidence, and the problem is likely to grow in the near future. While identification mechanisms for Artificial Intelligence (AI) generated content, such as watermarking, are being researched, the open nature of AI development makes it practically impossible to guarantee verifiable watermarks on all AI-generated content. This makes it all the more important to authenticate non-AI-generated content by default, so that its authenticity can be checked in the future.

Timestamp Authorities

Timestamp authorities have long been used to prove that data existed at a certain point in time. They are usually privately operated services that issue records attesting to when a document was created or modified. An estimated 1.2 trillion digital photos were taken in 2017 (Statista, 2017), and this number is likely to grow as the global population increases and media-recording devices (cameras, smartphones, etc.) become more widespread. Verifying media globally through private timestamp authorities will not scale: the costs would be massive and would hinder widespread adoption of media timestamp records.
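To put the 2017 estimate in perspective, spreading 1.2 trillion photos evenly over a year gives the average rate a global timestamping system would have to sustain if every photo were stamped individually:

$$
\frac{1.2 \times 10^{12}\ \text{photos}}{365 \times 86\,400\ \text{s}} = \frac{1.2 \times 10^{12}\ \text{photos}}{3.1536 \times 10^{7}\ \text{s}} \approx 3.8 \times 10^{4}\ \text{photos/s}
$$

That is roughly 38,000 stamps per second on average, before counting video or traffic peaks above the average.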

Authenticating Media Existence at a Global Scale Through Open Source

Free timestamp authorities exist (e.g. freeTSA.org), but they too are unlikely to scale because of their closed, centrally operated nature. OpenTimestamps is an open source solution for creating publicly verifiable timestamps of cryptographic hashes anchored in the Bitcoin blockchain. Because the Bitcoin blockchain is distributed and trust-minimized, it is an interesting candidate for a global-scale timestamp authority. Peter Todd's article OpenTimestamps: Scalable, Trust-Minimized, Distributed Timestamping with Bitcoin explains in more detail how OpenTimestamps works.
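Much of what makes this approach scalable is aggregation: many hashes are combined into a Merkle tree and only the tree's root is committed to Bitcoin, so one transaction can timestamp an arbitrary number of files. The sketch below illustrates that idea with SHA-256: it builds a root over a batch of media digests, produces an inclusion proof for one of them, and verifies the proof. It is a simplified illustration, not the OpenTimestamps proof format or client library; the function names and the rule of duplicating the last node on odd-sized levels are assumptions made for this example.

```python
import hashlib
from typing import List, Tuple

# One proof step: (sibling hash, True if the sibling sits on the left).
ProofStep = Tuple[bytes, bool]


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves: List[bytes], index: int) -> Tuple[bytes, List[ProofStep]]:
    """Build a Merkle root over `leaves` and an inclusion proof for leaves[index]."""
    if not 0 <= index < len(leaves):
        raise IndexError("index out of range")
    level = list(leaves)  # copy so the caller's list is not modified
    proof: List[ProofStep] = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumed rule: duplicate the last node on odd levels
        sibling = index ^ 1  # the other member of this node's pair
        proof.append((level[sibling], sibling < index))
        # Hash each adjacent pair to form the next level up.
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_proof(leaf: bytes, proof: List[ProofStep], root: bytes) -> bool:
    """Recompute the path from `leaf` up to the root and compare."""
    current = leaf
    for sibling, sibling_is_left in proof:
        current = sha256(sibling + current) if sibling_is_left else sha256(current + sibling)
    return current == root


# Five media digests aggregated into one root; only the root would be committed on-chain.
digests = [sha256(f"video-{i}".encode()) for i in range(5)]
root, proof = merkle_root_and_proof(digests, index=3)
print(verify_proof(digests[3], proof, root))       # True
print(verify_proof(sha256(b"fake"), proof, root))  # False
```

Only the 32-byte root needs to go on-chain; each device keeps its own digest plus the proof path, whose length grows only logarithmically with the batch size.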

Implementing a Frictionless Timestamp Recording Process

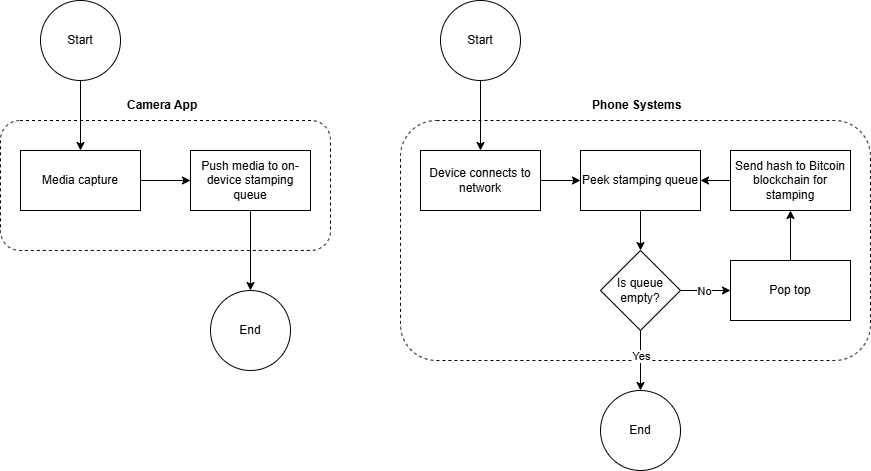

Stamping should happen passively on the device, without any user interaction (once the user has given prior consent). The figure above shows a high-level design of how the media-capturing device interacts with the open timestamp software.
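A minimal sketch of the passive, device-side part of this flow, assuming the device hashes each file at capture time and keeps a local queue of pending digests until a network connection is available. `PendingStampQueue`, `on_capture`, `flush`, and the `submit` callback are hypothetical names for illustration; in practice `submit` would hand the digest to the timestamping software, which this sketch does not model. Only the SHA-256 digest is submitted, never the media itself.

```python
import hashlib
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PendingStamp:
    digest: str         # hex SHA-256 of the captured media
    captured_at: float  # device clock at capture; untrusted, kept for reference only


@dataclass
class PendingStampQueue:
    # Hypothetical callback that submits one digest for timestamping; True on success.
    submit: Callable[[str], bool]
    pending: List[PendingStamp] = field(default_factory=list)

    def on_capture(self, media: bytes) -> str:
        """Hash newly captured media and queue the digest, with no user interaction."""
        # Real video files would be hashed incrementally in chunks rather than loaded whole.
        digest = hashlib.sha256(media).hexdigest()
        self.pending.append(PendingStamp(digest, time.time()))
        return digest

    def flush(self, online: bool) -> int:
        """If online, submit queued digests; keep failed ones for the next attempt."""
        if not online:
            return 0
        still_pending: List[PendingStamp] = []
        submitted = 0
        for stamp in self.pending:
            if self.submit(stamp.digest):
                submitted += 1
            else:
                still_pending.append(stamp)
        self.pending = still_pending
        return submitted


sent: List[str] = []


def fake_submit(digest: str) -> bool:
    sent.append(digest)  # stands in for a real network call
    return True


queue = PendingStampQueue(submit=fake_submit)
queue.on_capture(b"frame data 1")
queue.on_capture(b"frame data 2")
print(queue.flush(online=False))  # 0 (offline, both digests stay queued)
print(queue.flush(online=True))   # 2
print(len(queue.pending))         # 0
```

Keeping the queue on the device is what allows stamping to remain frictionless while offline; the trade-off is that the eventual timestamp lags behind the moment of capture.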

Shortcomings

Because the device itself could be tampered with, media cannot be authenticated on-device alone. Stamping therefore requires a connection to a network that can reach the Bitcoin blockchain in order to permanently record the hash at a point in time. The resulting timestamp also will not match the actual creation time of the media, since the capturing device may not have network access immediately. Furthermore, the media hash is committed to a Bitcoin block, so the resulting timestamp corresponds to whichever block the hash ends up in, whenever that may be. Finally, proving that a hash existed at some point in time does not prove that the media is genuine rather than fabricated; it only proves that media matching the hash existed no later than that time and has not been modified since.
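The limit on what a stamp proves can be made concrete. Verification recomputes the hash of the media being presented and compares it with the stamped digest; any modification, even a single byte, yields a different hash. The sketch below assumes the stamped digest and the block time have already been extracted from a timestamp proof and checked against the blockchain (that step is not shown). `StampRecord` and `existed_no_later_than` are hypothetical names, and the date used is an illustrative value.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class StampRecord:
    digest_hex: str       # SHA-256 digest the timestamp proof commits to (assumed verified)
    block_time: datetime  # time of the Bitcoin block that contains the commitment


def existed_no_later_than(media: bytes, record: StampRecord) -> Optional[datetime]:
    """Return the latest time the media can be shown to have existed, or None.

    A match only shows the bytes existed by record.block_time and are unchanged since;
    it says nothing about whether the content is genuine.
    """
    if hashlib.sha256(media).hexdigest() != record.digest_hex:
        return None  # any change to the bytes produces a different digest
    return record.block_time


# Illustrative values only.
original = b"raw video bytes"
record = StampRecord(hashlib.sha256(original).hexdigest(),
                     datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc))
print(existed_no_later_than(original, record))            # 2025-01-01 12:00:00+00:00
print(existed_no_later_than(original + b"\x00", record))  # None
```

Returning an upper bound rather than a simple yes/no reflects the point above: a stamp bounds when the media existed, not when or how it was created.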

Conclusion

Timestamping media will not be the definitive indicator of whether content is AI-generated, but it can provide supporting evidence when AI-generated imagery is created after an incident to manipulate the narrative around it. Further research into, and widespread adoption of, watermarking in both source data and models is still needed. Regulation of, and awareness about, AI-generated media also need to be promoted within governments and legal processes around the world.

Last modified: 2025-06-19